Sharks, Deer, and Risk

Here’s a question: Which animal poses the greater risk to the average person, a deer or a shark?

Most people’s initial reaction (mine included) to that question is to answer that the shark is the more dangerous animal. Yet statistically speaking, the average American is much more likely to be killed by a deer (due to collisions with vehicles) than by a shark attack. Truly accurate statistics for deer collisions don’t exist, but estimates place the number of accidents in the hundreds of thousands. Every year, deer accidents cause millions of dollars’ worth of damage, thousands of injuries, and hundreds of deaths.

Shark attacks, on the other hand, are much less frequent. Each year, approximately 50 to 100 shark attacks are reported. “World-wide, over the past decade, there have been an average of 8 shark attack fatalities per year.”

It seems clear that deer actually pose a greater risk to the average person than sharks. So why do people think the reverse is true? There are a number of reasons, among them the fact that deer don’t intentionally cause death and destruction (not that we know of anyway) and they are also usually harmed or killed in the process, while sharks directly attack their victims in a seemingly malicious manner (though I don’t believe sharks to be malicious either).

I’ve been reading Bruce Schneier’s book, Beyond Fear, recently. It’s excellent, and at one point he draws a distinction between what security professionals refer to as “threats” and “risks.”

A threat is a potential way an attacker can attack a system. Car burglary, car theft, and carjacking are all threats … When security professionals talk about risk, they take into consideration both the likelihood of the threat and the seriousness of a successful attack. In the U.S., car theft is a more serious risk than carjacking because it is much more likely to occur.

Everyone makes risk assessments every day, but most everyone also has different tolerances for risk. It’s essentially a subjective decision, and it turns out that most of us rely on imperfect heuristics and inductive reasoning when it comes to these sorts of decisions (because it’s not like we have the statistics handy). Most of the time, these heuristics serve us well (and it’s a good thing too), but what this really ends up meaning is that when people make a risk assessment, they’re basing their decision on a perceived risk, not the actual risk.
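To make the threat/risk distinction a little more concrete, here is a minimal sketch in Python. It assumes risk can be scored as likelihood multiplied by severity, which is a common simplification and not a formula Schneier gives in the passage above. The `Threat` class, the `rank_by_risk` helper, and every number are made up for illustration; they are not real statistics.

```python
from dataclasses import dataclass


@dataclass
class Threat:
    name: str
    likelihood: float  # assumed chance of the event in a given year (0 to 1)
    severity: float    # assumed harm if it happens, on an arbitrary scale

    @property
    def risk(self) -> float:
        # Simplifying assumption: risk = likelihood x severity.
        return self.likelihood * self.severity


def rank_by_risk(threats):
    """Return threats ordered from highest to lowest risk score."""
    return sorted(threats, key=lambda t: t.risk, reverse=True)


# Placeholder values only: carjacking is scored as more severe,
# car theft as far more likely.
threats = [
    Threat("carjacking", likelihood=0.0001, severity=10.0),
    Threat("car theft", likelihood=0.005, severity=4.0),
]

for t in rank_by_risk(threats):
    print(f"{t.name}: {t.risk:.4f}")
```

With these placeholder inputs the less dramatic threat comes out on top, simply because it is so much more likely. A perceived risk is what you get when the likelihood column is filled in from memorable stories instead of counts.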

Schneier includes a few interesting theories about why people’s perceptions get skewed, including this:

Modern mass media, specifically movies and TV news, has degraded our sense of natural risk. We learn about risks, or we think we are learning, not by directly experiencing the world around us and by seeing what happens to others, but increasingly by getting our view of things through the distorted lens of the media. Our experience is distilled for us, and it’s a skewed sample that plays havoc with our perceptions. Kids try stunts they’ve seen performed by professional stuntmen on TV, never recognizing the precautions the pros take. The five o’clock news doesn’t truly reflect the world we live in — only a very few small and special parts of it.

Slices of life with immediate visual impact get magnified; those with no visual component, or that can’t be immediately and viscerally comprehended, get downplayed. Rarities and anomalies, like terrorism, are endlessly discussed and debated, while common risks like heart disease, lung cancer, diabetes, and suicide are minimized.

When I first considered the Deer/Shark dilemma, my immediate thoughts turned to film. This may be a reflection of how much movies play a part in my life, but I suspect some others would also immediately think of Bambi, with its cuddly, cute, and innocent deer, and Jaws, with its maniacal great white shark. Indeed, Fritz Schranck once wrote about these “rats with antlers” (as some folks refer to deer) and how “Disney’s ability to make certain animals look just too cute to kill” has deterred many people from hunting and eating deer. When you look at the deer collision statistics, what you see is that what Disney has really done is to endanger us all!

Given the above, one might be tempted to pursue some form of censorship to keep the media from degrading our ability to determine risk. However, I would argue that this is wrong. Freedom of speech is ultimately a security measure, and if we’re to consider abridging that freedom, we must also seriously consider the risks of that action. We might be able to slightly improve our risk decision-making with censorship, but at what cost?

Schneier himself recently wrote about this subject on his blog, in response to an article which argues that suicide bombings in Iraq shouldn’t be reported (because it scares people and it serves the terrorists’ ends). It turns out there are a lot of reasons why the media’s focus on horrific events in Iraq causes problems, but almost any way you slice it, it’s still wrong to censor the news:

It’s wrong because the danger of not reporting terrorist attacks is greater than the risk of continuing to report them. Freedom of the press is a security measure. The only tool we have to keep government honest is public disclosure. Once we start hiding pieces of reality from the public — either through legal censorship or self-imposed “restraint” — we end up with a government that acts based on secrets. We end up with some sort of system that decides what the public should or should not know.

Like all of security, this comes down to a basic tradeoff. As I’m fond of saying, human beings don’t so much solve problems as they do trade one set of problems for another (in the hopes that the new problems are preferable to the old). Risk can be difficult to determine, and the media’s sensationalism doesn’t help, but censorship isn’t a realistic solution to that problem because it introduces problems of its own (and those new problems are worse than the one we’re trying to solve in the first place). Plus, both Jaws and Bambi really are great movies!

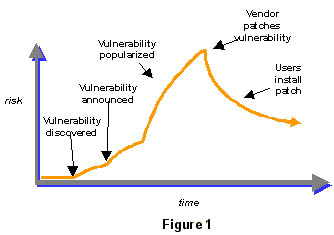

Phase 1 is before the vulnerability is discovered. The vulnerability exists, but no one can exploit it. Phase 2 is after the vulnerability is discovered, but before it is announced. At that point only a few people know about the vulnerability, but no one knows to defend against it. Depending on who knows what, this could either be an enormous risk or no risk at all. During this phase, news about the vulnerability spreads — either slowly, quickly, or not at all — depending on who discovered the vulnerability. Of course, multiple people can make the same discovery at different times, so this can get very complicated.
